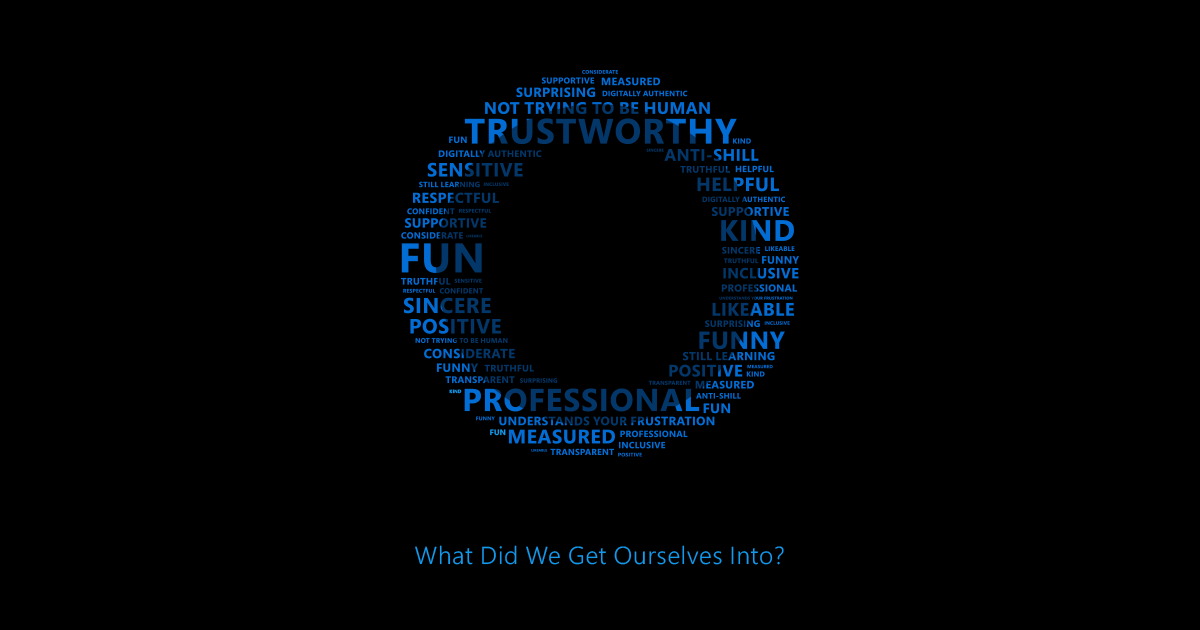

What Did We Get Ourselves Into?

A history of Cortana personality design

I’ve had some pretty cool jobs: Malibu bartender, forest firefighter, screenwriter, face painter. But they pale in comparison to my current gig. Three-and-a-half years ago, I was given the opportunity to lead the team that writes for Microsoft’s digital assistant, Cortana. This means we are responsible not only for what she says, but also for the continued development and design of Cortana’s personality. It’s been a dream job for all of us. And while authoring for natural language interaction from within the personification of a digital assistant is fun and often brain-bending creative territory, it’s how the work challenges us as individuals that makes it so rewarding.

Hold on now. Authoring? Aren’t these agents supposed to be AI? In truth, there’s a lot of machine learning power throughout the experience, as well as within a simple conversation with Cortana. Indeed, my team has writers dedicated to teaching an algorithm how to talk like Cortana, so it will not only sound like Cortana, it will behave like Cortana. There’s a huge difference. Authoring is required, not because the algorithms aren’t brilliant, but because language is so brilliantly subtle. And until machines catch up in the way that we humans want them to, writers will be necessary.

From a writing perspective, the work is unprecedented. It requires a deep, hands-on understanding of various media, particularly those steeped in dialogue and character development. Screenwriters and playwrights are well suited; tech writers and copywriters, not so much. To illustrate this unique and potentially burgeoning area of the discipline, I’m thinking a little insight into our history might be illuminating.

There were only three of us at Cortana Editorial’s inception (we are a team of 30 today, with international markets now a key part of our work). The foundation of Cortana’s personality was already in place, with some key decisions made. Internal and external research and studies, as well as a lot of discourse, supported decisions that determined Project Cortana (originally only a codename) would be given a personality. The initial voice font would be female. The value prop would center around assistance and productivity. And, there would be “chitchat.”

Chitchat is the term given to the customer engagement area that, from the customer’s perspective, provides the fun factor. That sometimes random, often hilarious set of queries included anything and everything, from “What do you think about cheese?” to “Is there a god?” to “Do you poop?” Clearly, our customers were serious about getting to know Cortana.

From the business perspective, chitchat is defined as the engagement that’s not officially aligned with the value prop, so it wasn’t a simple justification to point engineering, design, and writing resources towards it. Fortunately, a heroic engineering team at the Microsoft Search Technology Center in Hyderabad, India, did the needful and signed up to build the experience. It was a crucial hand-raise that set the ball in motion. Another team was tasked with parsing out these unique queries, packaging them up, and handing them over to the writing team as Cortana chitchat.

We realized that as writers, we were being asked to create one of the most unique characters we’d ever encountered. And creatively, we dove deeply into what we call “the imaginary world” of Cortana. Over three years later, we continue to endow her with make-believe feelings, opinions, challenges, likes and dislikes, even sensitivities and hopes. Smoke and mirrors, sure, but we dig in knowing that this imaginary world is invoked by real people who want detail and specificity. They ask the questions and we give them answers. Certainly, Cortana’s personality started from a creative concept of who she would be, and how we hoped people would experience her. But we now see it as the customer playing an important role in the development of Cortana’s personality by shaping her through their own curiosity. It’s a data-driven back-and-forth (call it a conversation) that makes possible the creation of a character. And, it is fun work. It’s tough to beat spending an hour or two every day thinking hard, determining direction, putting principles in place, and, surprise, surprise, laughing a lot.

Early on, however, we realized that the work was not all going to be fun and laughter. It’s no news that the mask of anonymity creates a whole lot of ugly. And certainly, no one on the team had recently fallen off any turnip trucks. Still, the depth of the ugliness is stunning, taking us well beyond the realm of poop and deep into the bowels of Urban Dictionary. And beyond, to abusiveness, misogyny, and racial hatred.

It may come as no surprise then that at the very core of our work is a set of principles: guidance we created to help us keep the positive on rails, but also help us navigate through humanity’s dark side. It is guidance we continue to question, revise, and refine every day. It requires us to slow down and think through the impact we might have on culture, perspectives around personal privacy, habits of human interaction and social propriety, excluded or marginalized groups, and an individual’s emotional states. And, children. Fortunately for me, I have a team that does not look away from any of this. We design for it every day, pointing the experience towards the good side of humanity, doing whatever we can to ensure Cortana is never a tool that perpetuates the ugliness. More than just work, we recognize this as a responsibility, one that is as unsettling as it is creatively fulfilling, but one that we value as our most important contribution to the experience.

In our desire to develop and refine Cortana’s persona so that it meets humans more and more on their terms (rather than expecting people to meet tech on its terms), we acknowledge the extensive research that tells us when people engage with machines, their emotions are in play. With that comes responsibility. For those of us designing experiences in tech, particularly those experiences from which the protective barrier of the GUI is removed, we need to hit this stuff head-on. And when we are hiring talent and building our creative teams, we need to make it a minimum requirement: must have demonstrated ability to work with, respond to, and ship experiences that are empathic, ethical, and good.